by Alex Perez

Today is National Social Media Day, and I thought it would be important to discuss some areas of concern that we’re sure most online users are running into on today’s internet: bot accounts, scams, and generative AI. As the Media Production Specialist, I’d like to think I could tell the difference between AI-generated video and real video, but, as you may have seen, that is proving more difficult as time goes on and the technology evolves.

As the saying goes, “don’t believe everything you see on the Internet,” but the internet, and social media especially, is where a lot of people spend their time consuming new information. Misinformation online has always been an issue, but the rapid evolution of technology like generative AI has made it easier to spread misinformation and harder to determine what is real and what is fake. As a result, it is a good rule of thumb to take everything you see online with a grain of salt and to verify information by looking closely at who is sharing it and making sure they are a trustworthy source. In most cases, if something seems too good to be true or sounds so crazy that it can’t possibly be true, it most likely isn’t. We hope to break some of this down to help you think more critically about the information you see online and better protect yourself from bad actors.

First off, let’s talk about bot accounts. Bot accounts are social media accounts that are run by “bots,” not real people. They are designed to seem like genuine people, but in the past they could often be spotted by their strange usernames and unusual grammar. However, AI has helped make these accounts seem more human-like in recent years. In fact, Meta (owner of Facebook and Instagram) launched AI Instagram accounts to interact with people as if they were real. This led to some controversy, and Meta quickly rolled back the move, deleting the accounts [1].

Regardless, this has not stopped others from creating their own AI accounts. There are many accounts that steal content from real people, post AI-generated content, and even use AI to steal a person’s likeness and identity. One user on TikTok recently found out her likeness was being used by another account to sell a product that she has no knowledge of or relation to. She has struggled to get anything meaningful done about this; reporting the account and disputing it with TikTok hasn’t completely resolved the issue.

The user shares how scary it is to think someone could take your likeness and make you say or do anything, especially if it is something that you don’t agree with or doesn’t align with your values.

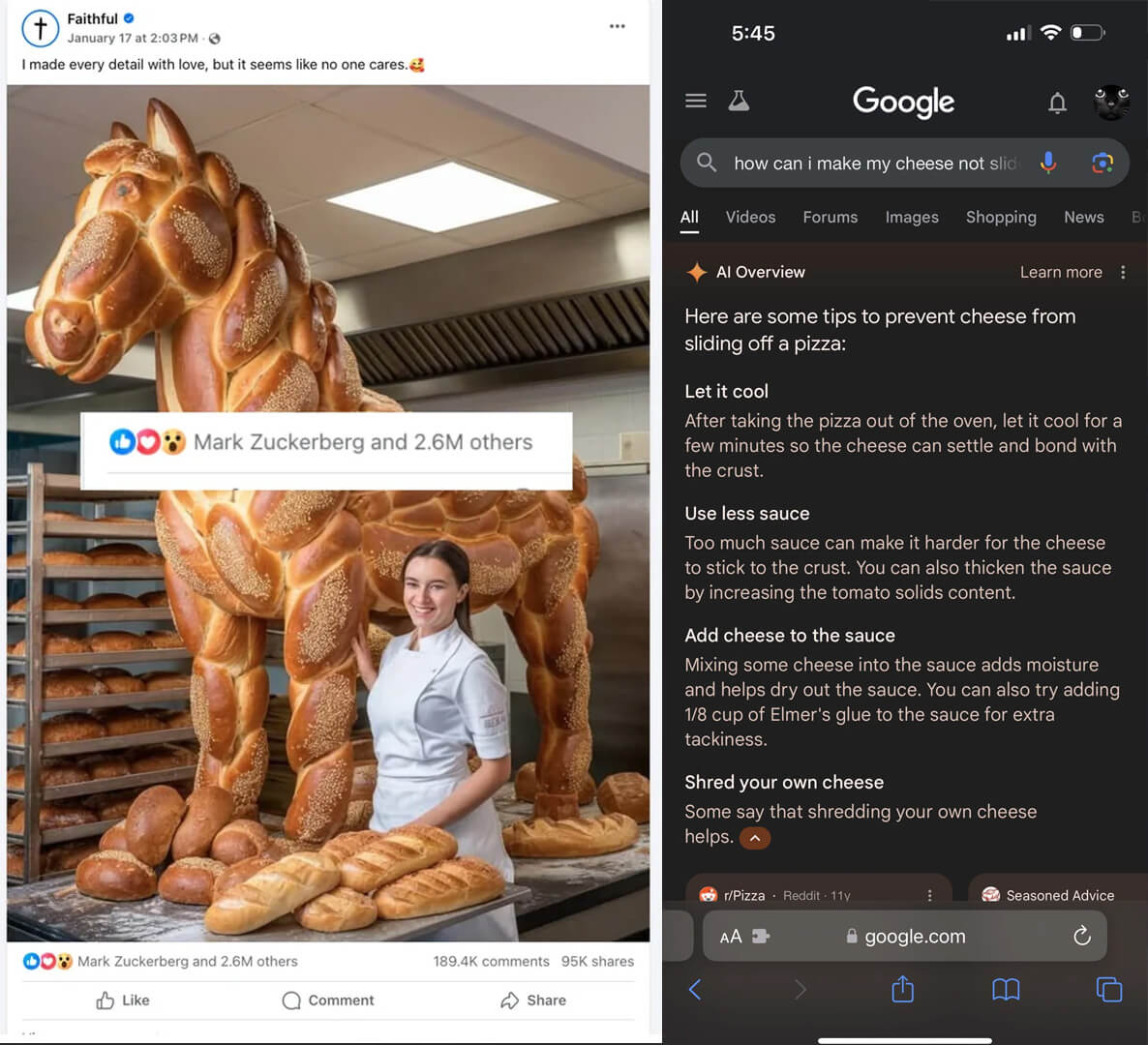

In addition, some accounts are posting what is being referred to as “AI slop.” This is a form of “engagement farming” that has become very popular on social media sites like Facebook. It usually involves an AI-generated video or photo with the goal of gaining as much attention and engagement as possible. The more people like or comment on the content, the better it does, and these types of posts are proving to be successful. Some credit the success of this type of content to bot accounts liking the AI-generated content, which has led some to believe in “dead internet theory,” a conspiracy theory that “suggests that the internet has been almost entirely taken over by artificial intelligence,” according to Kaitlyn Tiffany in her essay in The Atlantic [2]. It is important to note that while a lot of the engagement on these types of posts can come from bots and fake accounts, some of it comes from users who genuinely believe that the content is real. And the content isn’t limited to “please like this photo” posts; it has also included fake recipes that people have tried to make with disastrous results. In 2023, it was reported that some AI-generated recipes led people to make poisonous food [3].

An example of "AI slop" from Facebook

Screenshot of Google's "glue on pizza" response


When Google rolled out its AI Overview feature, many people pointed out that the AI made inaccurate claims and serious mistakes, like suggesting someone add glue to their pizza to make the cheese stick better [4]. Google has since fully committed to its AI Overview feature, planning to make it the way everyone gets their information. The new AI Mode leads with an AI-generated response rather than the traditional list of links to relevant websites, curating what you need within Google itself [5].

As mentioned before, social media and the internet are where most users get their news. The Pew Research Center found in its report that a majority of Americans get at least some, if not most, of their news from digital devices, which include smartphones, computers, and tablets [6].

While some still rely on traditional media like television, radio, or print, more people are turning to social media for news and other relevant information. As 7News Australia highlights in the following TikTok, the evolution of generative AI may have serious repercussions for the type of content people see online. Fake newscasts that look eerily authentic may cause irreparable harm if people can’t discern what is real and what is fake.

The scary thing about this, which has also been touted as one of the great things about AI, is that anyone can generate these kinds of videos. Companies that have focused on generative AI in the last few years have heavily emphasized that it will be available to everyone and can help bridge the gap when it comes to creating content. This includes smartphone manufacturers like Apple and Samsung, whose latest devices boast new AI features. That places the power of AI in the palm of your hand. However, it also means anyone can create a fake video about whatever they want and then try to pass it off as real.


Apple and Samsung's advertising of their AI features

In the early days of generative AI, some users, including myself, enjoyed generating images of silly things because the results looked funny, and it didn’t seem like any harm could come of it - it was all obviously fake and all in good fun. For example, some users prompted an early AI model to generate a video of actor Will Smith eating spaghetti. The result was a silly, somewhat scary video that clearly struggled to render the prompt realistically. However, with the crazy speed at which AI is evolving, users found that when they generated a video from the same prompt only a year later, the results were scary for a different reason: it started to look real…too real.

So, I suppose we can assume another, even more realistic version of Will Smith eating spaghetti will come out in another year or two, or even sooner. Please, just make sure that you don’t believe everything you see on the internet. Always check multiple sources and make sure they are trusted ones. In fact, you might want to scrutinize every bit of media you consume to make sure that what you are seeing is real. That doesn’t mean you shouldn’t believe anything, but be careful with what you share or engage with.

Sources

1. https://www.theguardian.com/technology/2025/jan/03/meta-ai-powered-instagram-facebook-profiles

4. https://www.theverge.com/2024/5/23/24162896/google-ai-overview-hallucinations-glue-in-pizza

5. https://blog.google/products/search/ai-mode-search/

6. https://www.pewresearch.org/journalism/fact-sheet/news-platform-fact-sheet/
